Contrary to popular belief, water has a place in tomorrow’s data centers.

Data center efficiency continues to be a hot topic, and few would dispute that cooling efficiency is a significant factor in data center power usage effectiveness (PUE).

Previously, we noted that the rapid and widespread adoption of power-hungry technologies like artificial intelligence, high-performance computing, and hyperscale cloud deployments is pushing the already increasing size and power density of data center deployments even higher, with some reaching the neighborhood of 1,000 W/SF or 75+ kW/rack.

The GPUs and CPUs that power these resource-intensive applications can collectively produce as much as 350% more heat than previous generations, challenging data center operators to find new and more efficient ways of rapidly cooling data halls – especially in legacy facilities not designed or equipped to handle high densities.

For example, previous generations of CPUs typically did not exceed 130 W, whereas the maximum power consumption of an NVIDIA Quadro RTX 8000 GPU is 295 W. A Dell PowerEdge C4140 1U server has (2) Intel Xeon CPUs, (4) NVIDIA GPUs, and a 2400 W power supply. It’s easy to see how quickly these add up to large power densities when a rack is filled with such servers.
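To make the "add up in a rack" point concrete, here is a rough sketch of the arithmetic. It is not a sizing method: it uses the 130 W figure as a stand-in for each Xeon, treats the 2400 W supply rating as the server's maximum draw (which overstates typical load), and the servers-per-rack counts are hypothetical.

```python
# Rough rack power-density arithmetic using the figures quoted in the text.
# Assumptions (hypothetical): each server draws its full power-supply rating,
# and the rack is filled with N identical 1U servers.

CPU_W = 130    # older-generation CPU ceiling cited in the text
GPU_W = 295    # NVIDIA Quadro RTX 8000 maximum power cited in the text
PSU_W = 2400   # Dell PowerEdge C4140 power supply rating cited in the text


def component_watts(cpus: int, gpus: int) -> int:
    """Processor power only (ignores memory, fans, drives, and PSU losses)."""
    return cpus * CPU_W + gpus * GPU_W


def rack_kw(servers_per_rack: int, watts_per_server: float) -> float:
    """Worst-case rack load in kW if every server runs at watts_per_server."""
    return servers_per_rack * watts_per_server / 1000.0


if __name__ == "__main__":
    print("processors per server:", component_watts(cpus=2, gpus=4), "W")
    for n in (10, 20, 30):
        print(f"{n} servers: {rack_kw(n, PSU_W):.1f} kW")
```

Under these assumptions, about 30 such servers already approach the 75 kW/rack range mentioned earlier.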

Can liquid cooling address these new, fast-emerging needs? Here’s what you need to know so you can make the right technical decisions for your organization.

Designing A Liquid Cooling System With Temperature Thresholds In Mind

Water has a much higher volumetric heat capacity than air, which creates significant savings opportunities over air cooling systems that rely on large, high-horsepower fans. The savings extend beyond fan power to connected components like feeders, switchboards, transformers, generators, and cooling units.

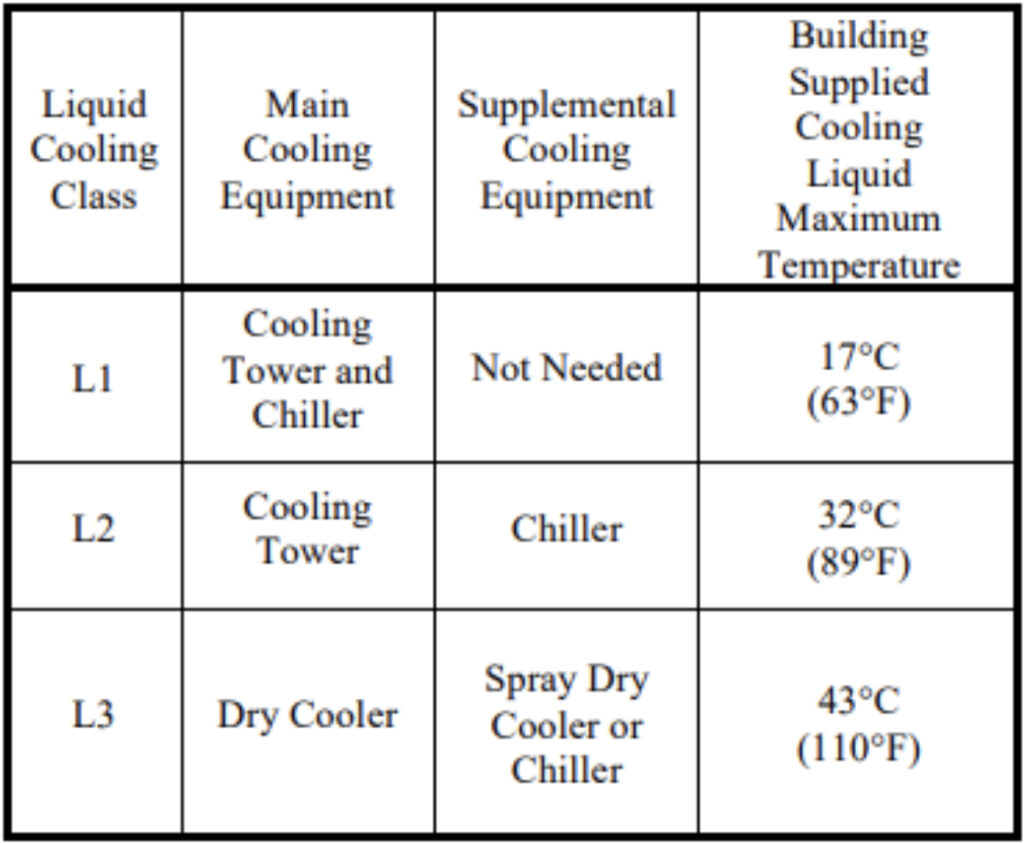
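The thermal-capacity advantage described above can be quantified with the sensible-heat relation Q = ρ·V̇·cp·ΔT. The sketch below compares the volume flow of air and of water needed to carry the same load; the property values are approximate room-temperature figures, and the 75 kW load and 10 K temperature rise are assumptions chosen for illustration.

```python
# Coolant volume flow needed to remove the same heat load with air vs. water,
# from Q = rho * V_dot * cp * dT. Property values are approximate (assumed).

AIR_RHO = 1.2        # kg/m^3
AIR_CP = 1006.0      # J/(kg*K)
WATER_RHO = 997.0    # kg/m^3
WATER_CP = 4186.0    # J/(kg*K)

M3S_TO_CFM = 2118.88   # cubic feet per minute per m^3/s
M3S_TO_GPM = 15850.3   # US gallons per minute per m^3/s


def volumetric_flow_m3s(heat_w: float, rho: float, cp: float, delta_t_k: float) -> float:
    """Volume flow (m^3/s) that absorbs heat_w with a delta_t_k temperature rise."""
    return heat_w / (rho * cp * delta_t_k)


if __name__ == "__main__":
    load_w = 75_000.0   # hypothetical 75 kW rack
    dt = 10.0           # hypothetical 10 K coolant temperature rise
    air = volumetric_flow_m3s(load_w, AIR_RHO, AIR_CP, dt)
    water = volumetric_flow_m3s(load_w, WATER_RHO, WATER_CP, dt)
    print(f"air:   {air:.2f} m^3/s ({air * M3S_TO_CFM:.0f} CFM)")
    print(f"water: {water * 1000:.2f} L/s ({water * M3S_TO_GPM:.1f} GPM)")
    print(f"air needs roughly {air / water:.0f}x the volume flow of water")
```

The ratio (roughly 3,500 to 1 with these properties) is why moving heat with water needs far less fan and air-handling capacity.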

Of course, implementing liquid cooling comes with its own considerations. ASHRAE TC 9.9 has published guidelines for liquid cooling to accompany its better-known air-cooled thermal guidelines. Similar to the recommended and allowable ranges for air-cooled IT equipment (ITE) classes, liquid-cooled ITE has its own class system, W1 through W5. Within classes W1 and W2, chillers are still required, because the facility supply water temperature should not exceed 17°C or 27°C (62.6°F or 80.6°F), respectively. More specialized equipment (Class W4 and W5) can accept water temperatures of up to 45°C (113°F) and, for W5, above 45°C.

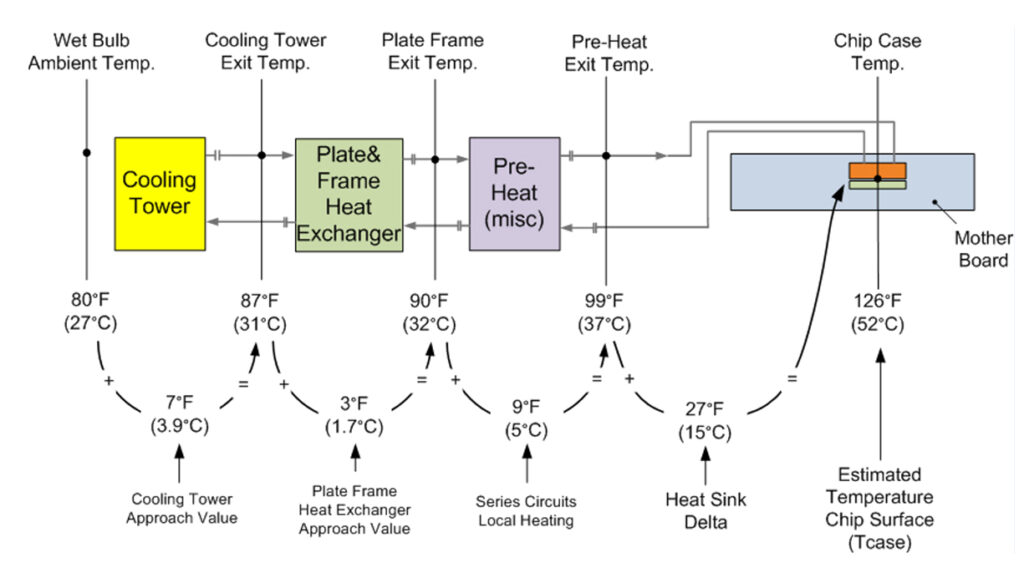
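The class limits described above can be expressed as a simple lookup from facility supply water temperature to the most restrictive class that can still accept it. This is a sketch of the 2011-era W1 to W5 scheme: the W3 limit of 32°C comes from the ASHRAE table rather than from this article, the 2°C lower bounds are ignored, and the function names are hypothetical.

```python
# Map facility supply water temperature to the most restrictive ASHRAE
# liquid-cooling class (W1-W5 scheme) whose upper limit it satisfies.
# Assumption: W3's 32 C limit is taken from the ASHRAE table, not the article;
# lower temperature limits are ignored in this sketch.

CLASS_LIMITS_C = [
    ("W1", 17.0),
    ("W2", 27.0),
    ("W3", 32.0),
    ("W4", 45.0),
]


def c_to_f(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def liquid_class(supply_c: float) -> str:
    """Return the lowest-numbered class that tolerates supply_c; W5 covers > 45 C."""
    for name, limit_c in CLASS_LIMITS_C:
        if supply_c <= limit_c:
            return name
    return "W5"


if __name__ == "__main__":
    for t in (15.0, 25.0, 30.0, 40.0, 50.0):
        print(f"{t:.0f} C ({c_to_f(t):.1f} F) -> {liquid_class(t)}")
```

Warmer supply water pushes a design toward the higher classes, which is what opens the door to chiller-less heat rejection.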

It’s also important to be mindful of “approach” temperatures while designing these systems. Heat exchangers are not perfect at transferring heat: the cooled stream always leaves somewhat warmer than the coolant entering (the approach), and the temperature difference driving heat transfer is characterized by the log mean temperature difference (LMTD) between the hot and cold sides. Studies from Lawrence Berkeley National Laboratory have shown that liquid leaving a chip heat sink can be as much as 40°F warmer than the supply water entering the data center.
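For readers who want to see how the approach and LMTD described above are computed, here is a minimal sketch for a counterflow heat exchanger such as a CDU. The stream temperatures in the example are hypothetical, and the approach is taken as the TCS supply temperature minus the facility water supply temperature.

```python
import math


def lmtd_counterflow(t_hot_in: float, t_hot_out: float,
                     t_cold_in: float, t_cold_out: float) -> float:
    """Log mean temperature difference for a counterflow heat exchanger."""
    dt1 = t_hot_in - t_cold_out   # difference at the hot-inlet end
    dt2 = t_hot_out - t_cold_in   # difference at the hot-outlet end
    if dt1 <= 0 or dt2 <= 0:
        raise ValueError("temperature cross: the streams cannot exchange heat this way")
    if math.isclose(dt1, dt2):
        return dt1                # limit of the formula when dt1 == dt2
    return (dt1 - dt2) / math.log(dt1 / dt2)


def approach(t_hot_out: float, t_cold_in: float) -> float:
    """Approach: how much warmer the cooled (TCS) stream leaves than the coolant enters."""
    return t_hot_out - t_cold_in


if __name__ == "__main__":
    # Hypothetical CDU: TCS return 32 C cooled to 21 C; facility water 18 C warmed to 28 C.
    print(f"LMTD: {lmtd_counterflow(32.0, 21.0, 18.0, 28.0):.2f} K")
    print(f"approach: {approach(21.0, 18.0):.1f} K")
```

Each heat exchanger between the facility plant and the chip adds its own approach, which is how the supply-to-heat-sink difference accumulates.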

The study assumed a “typical” Intel Xeon CPU commonly used in HPC applications. The critical temperature for the processor is its maximum case temperature, taken as 77.5°C (172°F). The study also suggested a design margin of about 22°F to account for higher-powered chips, various cooling topologies, ambient design conditions, and emerging technologies that could otherwise leave the system undersized or unusable in the future.
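One way to see how the figures above constrain the building supply water is a simple temperature budget that works back from the chip. This is a simplified sketch, not the study's method: it treats the ~40°F supply-to-heat-sink rise as the whole chain of approaches and ignores the case-to-coolant temperature difference, so the result is an optimistic upper bound.

```python
# Simplified temperature budget from the processor case back to the building
# supply water, using the figures quoted in the text (degrees F).
# Assumption: case-to-coolant temperature difference is ignored, so the
# result is an optimistic upper bound on supply temperature.


def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def max_supply_f(case_max_f: float = 172.0,
                 margin_f: float = 22.0,
                 supply_to_sink_rise_f: float = 40.0) -> float:
    """Highest building supply temperature that keeps heat-sink outlet liquid
    at or below (case limit - design margin)."""
    return case_max_f - margin_f - supply_to_sink_rise_f


if __name__ == "__main__":
    t = max_supply_f()
    print(f"upper bound on supply water: {t:.0f} F ({f_to_c(t):.1f} C)")
```

Any additional temperature difference between the case and the coolant lowers this bound further, which is why the breakpoints depend so heavily on the heat rejection method.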

The study identified three natural liquid temperature breakpoints, depending on the type of heat rejection used and the corresponding building supply temperature, shown below. This is the main reason facilities with chilled fluid are better equipped to handle liquid-cooled applications than data centers using direct/indirect evaporative or DX cooling.

Other considerations:

Here are a few other rules of the road to consider when designing, planning, and executing your liquid cooling approach:

Mixing systems:

It’s hard to envision a facility that is 100% liquid-cooled without any air-cooled infrastructure. Most meet-me-rooms (MMRs) and intermediate distribution frames (IDFs) will continue to house air-cooled ITE, and electrical infrastructure like UPSs and PDUs will continue to reject heat into the air. The data center white space itself will still carry a large process cooling load, even if the majority of the racks are liquid-cooled, and that load will still require an appreciable volume of air. Many advanced cooling technologies do not remove all of the heat generated by the server, so supplemental cooling is still needed. Liquid cooling will not eliminate the need for chillers and cooling units.
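The point that liquid cooling leaves a residual air load can be sketched as a simple heat balance. The capture fraction (the share of server heat removed by the liquid loop) and the extra air-cooled loads in the example are hypothetical values for illustration, not figures from the article or any vendor.

```python
# Split a liquid-cooled hall's heat load between the liquid loop and air.
# Assumption (hypothetical): a fixed fraction of rack heat is captured by the
# liquid; everything else, plus other air-cooled loads, must be handled by air.


def split_heat_load_kw(rack_load_kw: float,
                       liquid_capture_fraction: float,
                       other_air_load_kw: float = 0.0) -> tuple[float, float]:
    """Return (liquid_kw, air_kw) for the given rack load and capture fraction."""
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("capture fraction must be between 0 and 1")
    liquid_kw = rack_load_kw * liquid_capture_fraction
    air_kw = rack_load_kw - liquid_kw + other_air_load_kw
    return liquid_kw, air_kw


if __name__ == "__main__":
    # Hypothetical: 20 racks x 50 kW, 75% captured by liquid, and 60 kW of
    # UPS losses and air-cooled MMR/IDF equipment.
    liquid, air = split_heat_load_kw(20 * 50.0, 0.75, other_air_load_kw=60.0)
    print(f"liquid loop: {liquid:.0f} kW, air cooling: {air:.0f} kW")
```

Even with most rack heat going to liquid, hundreds of kilowatts may still need conventional air cooling in a hall of this size.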

Filtration:

The most common liquid cooling implementation uses a coolant distribution unit (CDU). CDUs may be integral or external to the rack; either way, they protect the integrity of the technology cooling system (TCS) and keep it separate from the facility process cooling water system (FWS). Facility water loops can be dirty, especially if they are served by an open cooling tower.

The CDU’s plate-type heat exchanger has very narrow spacing (2-8 mm), which helps drive efficiency. Endpoint filtration is necessary on the FWS side of the CDU, and filtration is recommended on the TCS side as well. Non-CDU liquid cooling systems are far less common and warrant even closer scrutiny of filtration.

Condensation:

The CDU can monitor the ambient air dew point and raise the TCS liquid temperature to 2°C above it to prevent condensation. The CDU also has several preheating functions that warm the water before it reaches the chip, where condensation can be catastrophic. As with filtration, owners and operators should be especially wary of condensation on equipment that lacks this safeguard.
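The dew-point safeguard described above can be illustrated with the Magnus approximation for dew point. This is a sketch only: the Magnus coefficients are one commonly used parameter set, the function names are hypothetical, and how a particular CDU actually computes its setpoint is vendor-specific.

```python
import math

# Magnus-formula coefficients (one common parameter set, assumed here; roughly
# valid from -45 C to 60 C over liquid water).
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degrees C


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point (C) from dry-bulb temperature and relative humidity."""
    if not 0.0 < rel_humidity_pct <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def tcs_supply_setpoint_c(requested_c: float, air_temp_c: float,
                          rel_humidity_pct: float, margin_c: float = 2.0) -> float:
    """Raise the requested TCS supply temperature to at least dew point + margin."""
    return max(requested_c, dew_point_c(air_temp_c, rel_humidity_pct) + margin_c)


if __name__ == "__main__":
    # Hypothetical room at 25 C / 50% RH with a requested 10 C TCS supply.
    print(f"dew point: {dew_point_c(25.0, 50.0):.2f} C")
    print(f"TCS setpoint: {tcs_supply_setpoint_c(10.0, 25.0, 50.0):.2f} C")
```

In this example the requested 10°C supply would sit below the room's dew point, so the safeguard lifts it to about 15.9°C.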

With poorly controlled humidification, moisture that would otherwise have condensed on cooling coils may end up condensing on IT equipment instead. Furthermore, densifying legacy data centers that use depressed chilled water temperatures or high levels of outside air could also prove problematic.

Additional infrastructure:

It may be necessary to install separate heat exchangers and pumping loops to segregate liquid cooling systems. Whether driven by technical requirements or best practices, this additional infrastructure can create ambiguity over ownership and maintenance responsibilities between the facility operator and the client. Its physical location also affects access and, potentially, leasable square footage.

Redundancy and uptime are further concerns. The pump and heat exchanger will need preventive maintenance at some point, but flow to the ITE must be maintained. As with all critical equipment, concurrent maintainability should be addressed, and it can be designed for. Similarly, during a transfer to generator power, the water must circulate continuously, especially in high-density applications. Arguably, pumps should be UPS-backed to provide uninterrupted cooling, and the pump motor voltage needs to be coordinated accordingly. In any case, the service level agreement between the operator and the end user needs to state all of these expectations clearly and define who is responsible for what.
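To illustrate why continuous circulation matters during a generator transfer, here is a rough lumped estimate of how long the TCS loop's water can absorb the IT load if the pumps keep running on UPS but heat rejection is temporarily lost. The loop volume, load, and allowable rise are hypothetical, and the model assumes the loop water mixes evenly; if the pumps themselves stop, heat concentrates in the cold plates and the real margin is far shorter.

```python
# Lumped estimate of loop ride-through time when heat rejection is lost but
# pumps keep circulating (for example, on UPS power during a generator transfer).
# Assumptions (hypothetical): water at ~1 kg/L, cp = 4186 J/(kg*K), and the
# whole loop volume mixes and warms evenly.

WATER_CP = 4186.0  # J/(kg*K)


def seconds_to_rise(load_kw: float, loop_volume_l: float, allowed_rise_k: float,
                    density_kg_per_l: float = 1.0, cp: float = WATER_CP) -> float:
    """Time (s) for the loop water to warm by allowed_rise_k under load_kw."""
    heat_capacity_j_per_k = loop_volume_l * density_kg_per_l * cp
    return heat_capacity_j_per_k * allowed_rise_k / (load_kw * 1000.0)


if __name__ == "__main__":
    # Hypothetical: 75 kW of liquid-cooled load, 200 L loop, 10 K allowable rise.
    t = seconds_to_rise(75.0, 200.0, 10.0)
    print(f"about {t:.0f} s before the loop warms by 10 K")
```

Even in this best case the margin is on the order of a couple of minutes, which is why uninterrupted pumping and a clear plan for restoring heat rejection matter.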

Logistics:

The most obvious challenge to implementing a liquid-cooled solution is getting the liquid to the rack. While it may seem advantageous to have a raised floor to route and conceal piping, it can be challenging to snake pipe between floor pedestals or to visually verify its integrity, especially with power and network infrastructure already in place.

Open floor tiles in an operational data center also pose additional hazards. If the piping is large-diameter, it will likely be prefabricated steel (rather than copper) bolted together in the field to avoid onsite welding. This is challenging and time-consuming whether the piping runs overhead or underfloor. Raised floor tiles are only 24” x 24”, so stringers need to be removed or cut out to install long sticks of pipe. This can call the floor’s structural integrity into question, especially when it’s supporting densely loaded cabinets.

Finally, most owners do what they can to keep plumbing out of the data hall. However, newly installed piping needs to be flushed and filled with hundreds or thousands of gallons of water before it can become operational. Routing hoses from back-of-house areas into live data hall white space to flush and fill the pipe will understandably cause operators stress and consternation. These steps need to be carefully assessed, planned, and executed to avoid significant disruptions to the pristine computing environment.
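To get a feel for the flush-and-fill volumes mentioned above, a quick estimate multiplies each pipe's internal cross-section by its length. The pipe runs below are hypothetical, the inside diameters are standard Schedule 40 values, and the estimate excludes CDUs, coils, and hose volumes.

```python
import math

GAL_PER_FT3 = 7.48052  # US gallons per cubic foot


def gallons_per_foot(inner_diameter_in: float) -> float:
    """Water volume (US gal) held by one foot of pipe with the given inside diameter."""
    d_ft = inner_diameter_in / 12.0
    return math.pi / 4.0 * d_ft ** 2 * GAL_PER_FT3


def fill_volume_gal(runs: list[tuple[float, float]]) -> float:
    """Total fill volume for (inside_diameter_in, length_ft) pipe runs."""
    return sum(gallons_per_foot(d_in) * length_ft for d_in, length_ft in runs)


if __name__ == "__main__":
    # Hypothetical supply + return mains and branches (Schedule 40 inside diameters).
    runs = [
        (6.065, 2 * 250.0),  # 6" mains, 250 ft each way
        (4.026, 2 * 150.0),  # 4" branches, 150 ft each way
    ]
    print(f"approximate fill volume: {fill_volume_gal(runs):.0f} gal")
```

Even this modest hypothetical layout holds on the order of a thousand gallons, all of which has to be brought into and drained from the space during commissioning.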

Several groups, including the Open Compute Project’s Advanced Cooling Facilities and Advanced Cooling Solutions subcommittees, are developing reference designs for deploying liquid cooling in new and existing facilities.

Is It Right For Your Data Center?

Densification is driving demand for more efficient and effective cooling technologies. As semiconductor manufacturing technology continues to evolve, we will soon be knocking on the door of the 3 nm MOSFET technology node. It’s entirely possible that we will reach the upper limits of air-cooling effectiveness, or even see regulatory mandates that force more widespread adoption of liquid cooling, in the not-too-distant future. Furthermore, certain designs will require liquid cooling. While liquid cooling is poised to become the go-to cooling methodology for colocation deployments, it’s imperative to weigh its obvious benefits against the potential downsides.

Brian Medina, PE, MBA, CPM is Director of Strategy and Development for STACK INFRASTRUCTURE